Day 15 - #90DaysOfDevOps Challenge

Python is the go-to language for automation in the DevOps world, making it an essential skill for every DevOps engineer. In this article, we will discuss the Python libraries that are indispensable for DevOps professionals. Additionally, we will explore the power of handling JSON and YAML files in Python, along with practical tasks related to these topics.

Python Libraries for DevOps Engineers

Python Libraries:

A Python library is a collection of pre-written code, usually organized into one or more modules, that offers a wide range of functionality. It provides ready-to-use functions, classes, and methods that can be imported into your Python programs to extend their capabilities, in order to solve specific problems or perform certain tasks.

For example, if you need to do complex calculations, there's a library for that. If you want to manipulate and analyze data, there's a library for that too. Whether you're working with numbers, text, graphics, or networks, there's usually a library available to make your life easier. Instead of writing everything from scratch, you can import a library into your code and use its functions to get things done quickly. It's like having a collection of tools in your programming toolbox, ready to be used whenever you need them.

Now, let's look at some of the most widely used Python libraries in DevOps:

1) Ansible: A powerful automation tool written in Python. Ansible allows you to define infrastructure as code (its playbooks are written in YAML) and automate configuration management, application deployment, and orchestration.

2) Boto3: The official AWS SDK for Python, which provides easy access to various Amazon Web Services (AWS) resources. It enables you to automate interactions with AWS services, manage cloud resources, and deploy applications.

3) Docker SDK for Python: This library allows you to interact with Docker containers and manage containerized applications programmatically. It provides an interface to create, deploy, and manage Docker containers and images.

4) Pytest: A testing framework that simplifies writing and running tests in Python. It offers a concise syntax, powerful assertions, and test discovery features, making it popular for automated testing in DevOps workflows.

5) GitPython: A library for interacting with Git repositories programmatically. It enables you to perform Git operations like cloning repositories, committing changes, and managing branches, making it useful for version control automation.

6) Prometheus Client: A Python library for instrumenting applications and exposing metrics in the Prometheus format. It allows you to monitor your applications and infrastructure, making it valuable for observability in DevOps practices.
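To give a feel for how pytest works, here is a minimal sketch of a test file (install pytest with `pip install pytest`). The file name `test_versions.py` and the `parse_version` helper are hypothetical; by default pytest collects functions whose names start with `test` from files named `test_*.py` and reports any plain `assert` that fails.

```python
# test_versions.py (hypothetical file name; pytest collects test_*.py files)


def parse_version(tag: str) -> tuple[int, int, int]:
    """Hypothetical helper: turn a release tag like 'v1.4.2' into (1, 4, 2)."""
    major, minor, patch = tag.removeprefix("v").split(".")
    return int(major), int(minor), int(patch)


def test_parse_version():
    # On failure, pytest shows the values on both sides of the comparison
    assert parse_version("v1.4.2") == (1, 4, 2)


def test_versions_compare_numerically():
    # Tuples compare element by element, so 1.10.0 sorts after 1.9.9
    assert parse_version("v1.10.0") > parse_version("v1.9.9")
```

Running `pytest` in the directory that contains the file discovers and runs both test functions.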

There are many more Python libraries available that cater to different aspects of DevOps, including infrastructure provisioning, monitoring, log management, and continuous integration/continuous deployment (CI/CD) pipelines. The choice of libraries depends on specific requirements and the tools and technologies used in your DevOps workflows.

Modules:

In Python, a module is a file that contains Python code. It serves as a way to organize and reuse code by grouping related functions, classes, and variables together.

A module is typically a single `.py` file; a directory that groups several related modules is called a package. Splitting code into separate files this way makes larger programs easier to manage and maintain. Modules can be imported into other Python scripts or programs using the `import` statement. Once a module is imported, you can access the functions, classes, and variables defined in it and use them in your code.

Examples of modules in Python include `os`, `sys`, and `json` from the standard library, and `yaml`, which is provided by the third-party PyYAML library. These modules provide various functions and classes that you can use by importing them into your Python code.
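A short sketch of the usual ways to import the standard-library modules mentioned above and use what they define. The `config` dictionary is made-up sample data.

```python
import json                    # import a whole module, use it as json.<name>
import os.path                 # import a submodule (this also binds the name os)
from sys import version_info   # import one name directly from a module

config = {"env": "staging", "replicas": 3}  # made-up sample data

# Call functions through the module name
text = json.dumps(config)
print(text)                                  # {"env": "staging", "replicas": 3}
print(os.path.join("configs", "app.json"))   # the path separator depends on the OS

# Names imported with "from ... import" are used without a module prefix
print(f"Running on Python {version_info.major}.{version_info.minor}")
```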

In Python, the terms "library" and "module" are closely related but refer to slightly different concepts:

A module is a single file that contains Python code. It can include functions, classes, variables, and other code elements. Modules can be imported into your Python scripts or programs using the import statement.

A library, on the other hand, is typically composed of multiple modules that work together to provide a comprehensive set of features. Third-party libraries are usually distributed as packages, which can be installed using package managers like pip. Once installed, you can import and use the library's modules in your code.
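One way to see the module/package distinction from inside Python: a package (a directory of modules) has a `__path__` attribute, and a plain module does not. In a standard CPython installation, the standard-library `json` happens to be a small package, while `os` is a single module; `pkgutil.iter_modules()` lists the modules inside a package.

```python
import json
import os
import pkgutil

# Packages carry a __path__ (the directories holding their modules); plain modules don't
print("json is a package:", hasattr(json, "__path__"))  # True
print("os is a package:", hasattr(os, "__path__"))      # False

# List the modules that make up the json package (decoder, encoder, ...)
submodules = sorted(info.name for info in pkgutil.iter_modules(json.__path__))
print(submodules)
```

The exact list of submodules can vary slightly between Python versions, but it always includes `decoder` and `encoder`.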

JSON and YAML in Python

JSON (JavaScript Object Notation) and YAML (YAML Ain't Markup Language) are two popular data serialization formats for storing and exchanging data, and Python can read and write both.

JSON:

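- JSON is a lightweight and widely used format.
- It represents data as objects (key-value pairs) and arrays (ordered lists).
- Python's built-in `json` module converts JSON strings to Python objects (`json.loads()`) and Python objects to JSON strings (`json.dumps()`). Its `json.load()` and `json.dump()` counterparts do the same for files.
- JSON is commonly used for data exchange between systems.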

Example:

```python
import json

# JSON data
json_data = '{"Car": "Tesla", "Model": "Cybertruck", "Year": 2023}'

# Converting JSON to a Python object (a dict here)
python_obj = json.loads(json_data)
print(python_obj)

# Converting the Python object back to JSON
json_str = json.dumps(python_obj)
print(json_str)
```

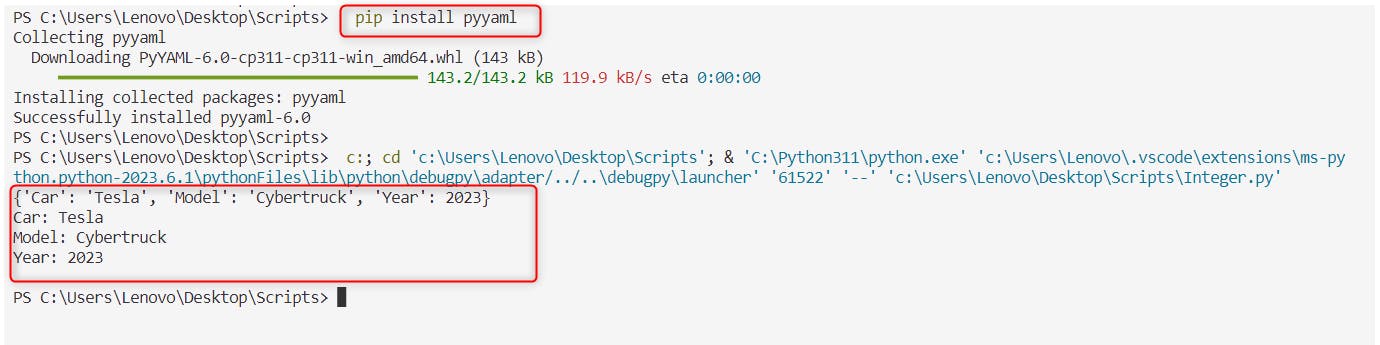

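Output: the script prints the Python dictionary `{'Car': 'Tesla', 'Model': 'Cybertruck', 'Year': 2023}` followed by the JSON string `{"Car": "Tesla", "Model": "Cybertruck", "Year": 2023}`.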

In the JSON example, we use the json module to load the JSON data into a Python object using json.loads(), and then convert the Python object back to JSON using json.dumps().
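The conversion maps JSON types onto Python types: objects become `dict`, arrays become `list`, strings become `str`, numbers become `int` or `float`, `true`/`false` become `True`/`False`, and `null` becomes `None`. A small sketch with a made-up service record shows the mapping in both directions:

```python
import json

# Made-up sample record covering the main JSON types
doc = '{"name": "web", "ports": [80, 443], "enabled": true, "owner": null, "cpu": 0.5}'

obj = json.loads(doc)
print(obj)
# {'name': 'web', 'ports': [80, 443], 'enabled': True, 'owner': None, 'cpu': 0.5}

for key, value in obj.items():
    print(f"{key}: {type(value).__name__}")
# one line each: name: str, ports: list, enabled: bool, owner: NoneType, cpu: float

# Dumping converts back: True -> true, None -> null
print(json.dumps(obj) == doc)  # True
```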

YAML:

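- YAML is a human-readable format.
- It uses indentation and a few special characters (such as `:` for key-value pairs and `-` for list items) to represent structure.
- In Python, YAML is handled by the third-party PyYAML library (installed as `pyyaml`, imported as `yaml`). With it, you can convert YAML data to Python objects (`yaml.safe_load()`, or `yaml.load()` with an explicit loader) and Python objects to YAML data (`yaml.dump()`).
- YAML is often used for configuration files.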

Example:

- First, we need to install the PyYAML library with pip:

```bash
pip install pyyaml
```

- Now we can use PyYAML to load the YAML data into a Python object with `yaml.load()` and an explicit loader, and then convert the Python object back to YAML with `yaml.dump()`.

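```python
import yaml

# YAML data
yaml_data = '''
Car: Tesla
Model: Cybertruck
Year: 2023
'''

# Converting YAML to a Python object.
# SafeLoader builds only plain Python types (dict, list, str, int, ...);
# yaml.safe_load(yaml_data) is an equivalent shorthand.
python_obj = yaml.load(yaml_data, Loader=yaml.SafeLoader)
print(python_obj)

# Converting the Python object back to YAML
yaml_str = yaml.dump(python_obj)
print(yaml_str)
```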

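Output: the script prints the dictionary `{'Car': 'Tesla', 'Model': 'Cybertruck', 'Year': 2023}`, followed by the same data serialized back to YAML as three `key: value` lines.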
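One detail worth knowing about `yaml.dump()`: by default it sorts mapping keys alphabetically. That is invisible in the example above because `Car`, `Model`, and `Year` are already in alphabetical order; passing `sort_keys=False` keeps the dictionary's own order. A sketch with made-up settings, assuming PyYAML is installed:

```python
import yaml  # PyYAML: pip install pyyaml

# Made-up deployment settings, in the order we want them written
settings = {"service": "web", "replicas": 3, "image": "nginx"}

print(yaml.dump(settings))
# image: nginx
# replicas: 3
# service: web

print(yaml.dump(settings, sort_keys=False))
# service: web
# replicas: 3
# image: nginx
```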

Both JSON and YAML serve as convenient ways to store and share data in a structured format. JSON has simpler syntax and wider adoption, while YAML offers more readability and flexibility. The choice depends on your specific needs and preferences.
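To make the comparison concrete, here is a sketch (assuming PyYAML is installed; the configuration values are made up) of a YAML feature JSON lacks, comments, plus one of YAML's flexibility pitfalls: PyYAML follows YAML 1.1, where unquoted words such as `on`, `off`, `yes`, and `no` are read as booleans, and an unquoted `1.10` would be read as the float `1.1`.

```python
import json

import yaml  # PyYAML: pip install pyyaml

# Made-up configuration; the comments are valid YAML but would be invalid JSON
config_text = """
# Deployment settings
app: web
replicas: 3
ports:
  - 80
  - 443
debug: on          # unquoted on/off/yes/no become booleans in YAML 1.1
version: "1.10"    # quoted, so it stays the string "1.10" instead of float 1.1
"""

config = yaml.safe_load(config_text)
print(config)
# {'app': 'web', 'replicas': 3, 'ports': [80, 443], 'debug': True, 'version': '1.10'}

# The same data as JSON: the comments are gone and the boolean is spelled true
print(json.dumps(config))
```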

TASKS

1) Create a dictionary in Python and write it to a JSON file.

2) Read the JSON file `services.json` kept in this folder and print the service names of every cloud service provider.

3) Read the YAML file `services.yaml` using Python and convert its contents from YAML to JSON.

Task-1: Create a dictionary in Python and write it to a JSON file.

Solution:

We create a dictionary named `drinks` that represents different categories of drinks. Its keys are "sodas", "juices", and "teas", and each value is a list of the drink types in that category.

Next, we specify the path of the JSON file in the variable `file_path`; in this case, "drinks.json". We open the file in write mode ("w") with the `open()` function and use the `json.dump()` function to write the contents of the `drinks` dictionary to it.

The `indent=4` argument adds indentation for readability: each nesting level of the JSON output is indented by 4 spaces (we will see the difference when this argument is omitted below). Finally, we print a message to confirm that the dictionary has been written to the JSON file.

```python
import json

# Create a dictionary of drink types
drinks = {
    "sodas": ["cola", "lemon-lime", "orange"],
    "juices": ["apple", "orange", "cranberry"],
    "teas": ["green tea", "black tea", "herbal tea"],
}

# Specify the file path
file_path = "drinks.json"

# Write the dictionary to a JSON file, indented by 4 spaces per level
with open(file_path, "w") as json_file:
    json.dump(drinks, json_file, indent=4)

print("Dictionary has been written to the JSON file.")
```

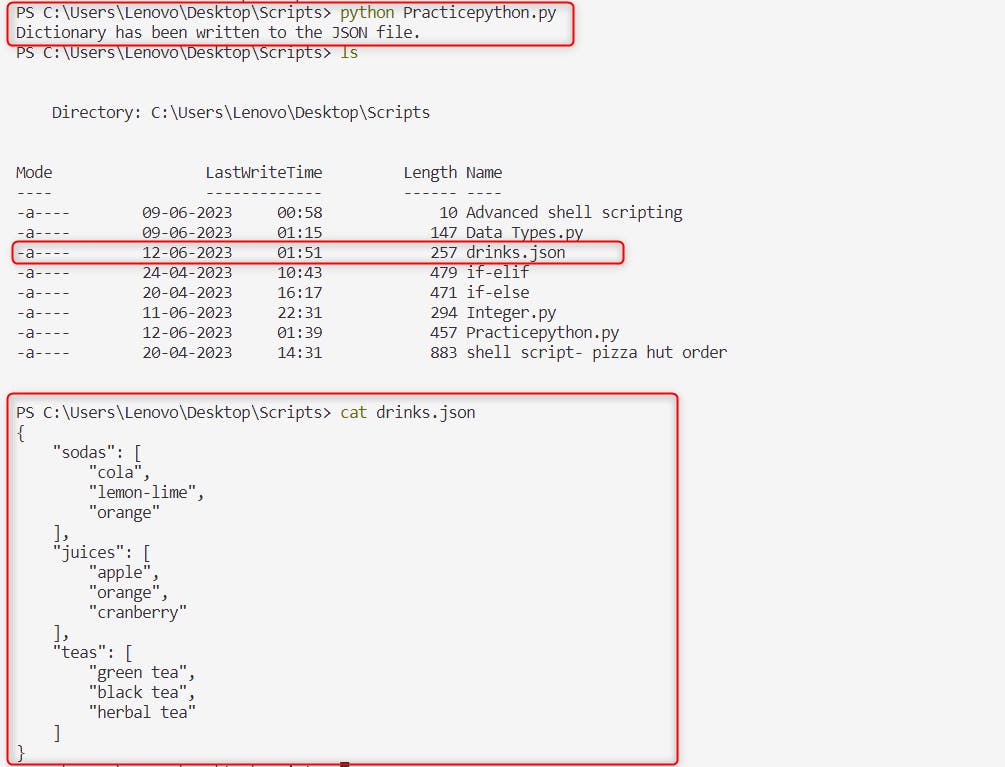

- After running this code, you will find a file named "drinks.json" in the directory you ran the script from (usually the same directory as your Python script), containing the JSON representation of the `drinks` dictionary with proper indentation.

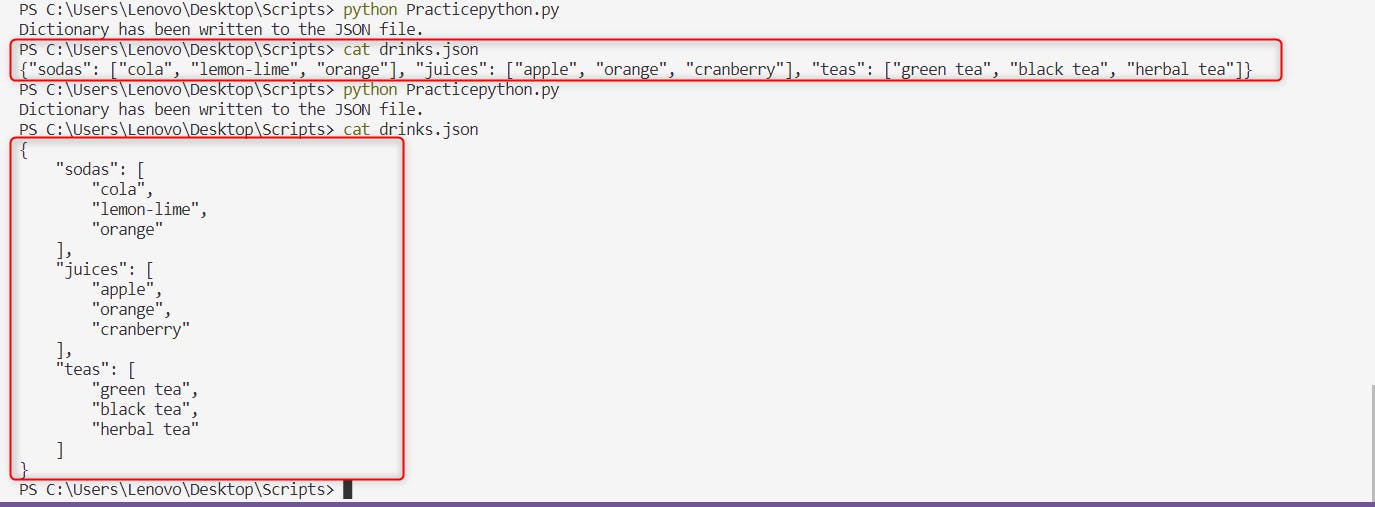

Output:

The output below shows the difference between writing the JSON with and without `indent=4`. The first highlighted section was written without indentation, so the whole object sits on a single line; the second highlighted section uses `indent=4` and is much easier to read.

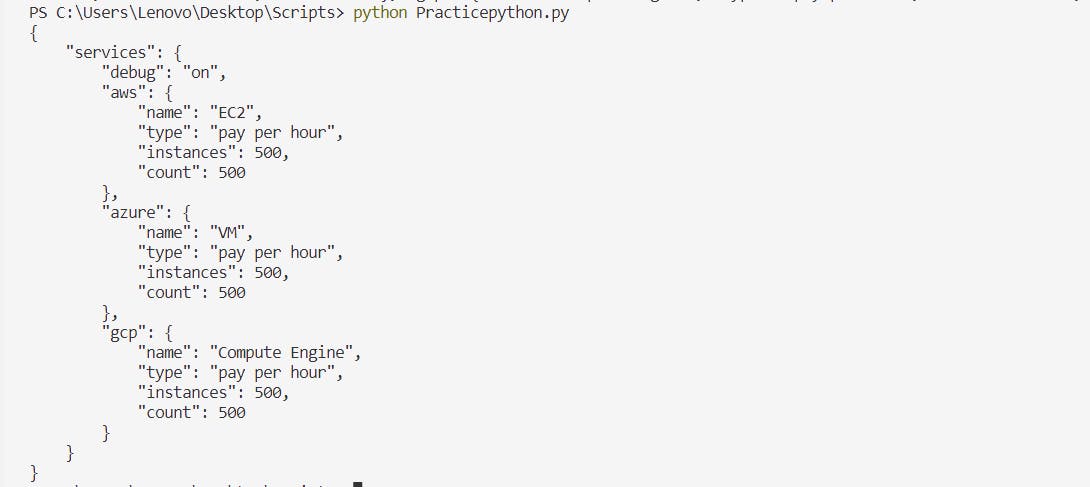
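The same difference can be reproduced with `json.dumps()`, which accepts the same `indent` argument as `json.dump()` but returns a string instead of writing to a file. A sketch using a trimmed-down version of the `drinks` dictionary:

```python
import json

# A smaller version of the drinks dictionary from Task-1
drinks = {"sodas": ["cola", "lemon-lime"], "teas": ["green tea"]}

compact = json.dumps(drinks)
print(compact)
# {"sodas": ["cola", "lemon-lime"], "teas": ["green tea"]}

pretty = json.dumps(drinks, indent=4)
print(pretty)
# {
#     "sodas": [
#         "cola",
#         "lemon-lime"
#     ],
#     "teas": [
#         "green tea"
#     ]
# }
```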

Task-2: Read the JSON file services.json from the folder 2023/day15 of the 90DaysOfDevOps Git repository (its contents are given below for reference) and print the service names of every cloud service provider.

services.json File

```json
{
    "services": {
        "debug": "on",
        "aws": {
            "name": "EC2",
            "type": "pay per hour",
            "instances": 500,
            "count": 500
        },
        "azure": {
            "name": "VM",
            "type": "pay per hour",
            "instances": 500,
            "count": 500
        },
        "gcp": {
            "name": "Compute Engine",
            "type": "pay per hour",
            "instances": 500,
            "count": 500
        }
    }
}
```

Solution:

First, we import the `json` module to work with the JSON file. Then we use the `open()` function to open "services.json" in read mode; the `with` statement ensures that the file is properly closed after its contents are read.

The json.load() function is used to load the JSON contents from the file and parse it into a Python dictionary. The parsed data is stored in the data variable.

You can then access specific values from the JSON structure using dictionary indexing. Here, we are accessing the name values under the aws, azure, and gcp keys within the services dictionary, and printing them.

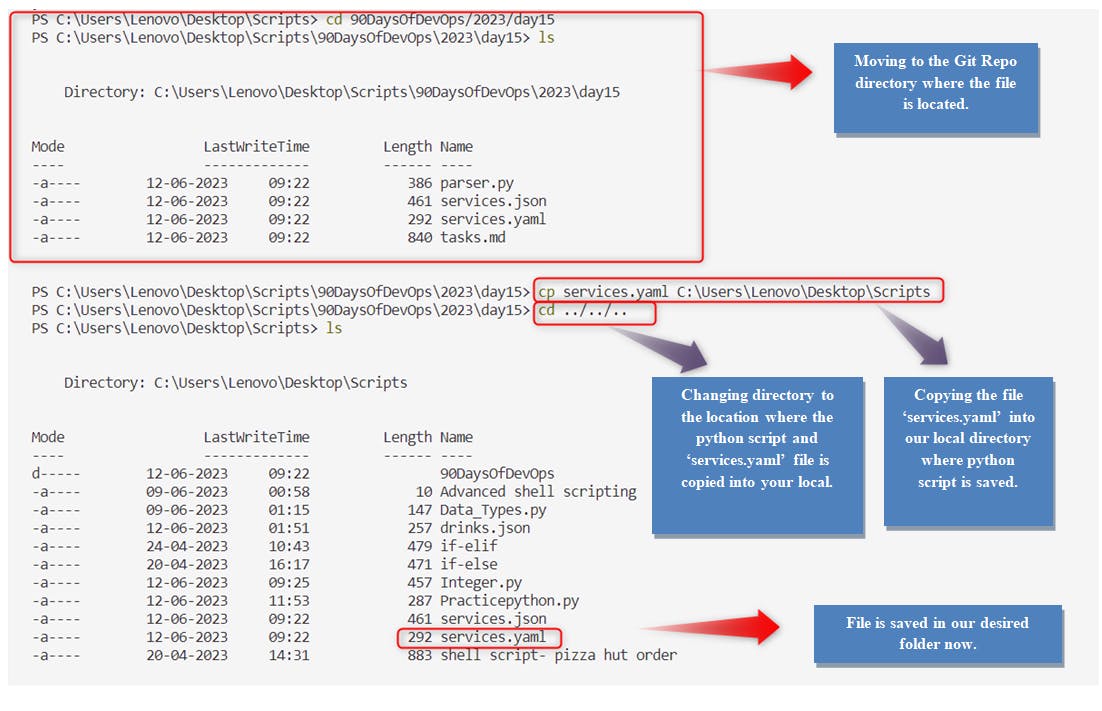

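(Note: Copy the services.json file into the directory you run the script from, usually the same directory as your Python script; the relative path 'services.json' is resolved against the current working directory.)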

```python
import json

# Open the services.json file in read mode
with open('services.json', 'r') as file:
    # Load the JSON contents into a Python dictionary
    data = json.load(file)

# Access and print specific values from the JSON structure
print("aws:", data['services']['aws']['name'])
print("azure:", data['services']['azure']['name'])
print("gcp:", data['services']['gcp']['name'])
```

Output: the script prints `aws: EC2`, `azure: VM`, and `gcp: Compute Engine`, one per line.
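The script above hard-codes the three provider keys. A sketch of a more general variant (the `service_names` function is a hypothetical helper, not part of the task) loops over everything under `"services"` and skips entries that are not provider objects, such as `"debug": "on"`, so a newly added provider would be picked up automatically. To keep it self-contained, it parses an inline copy of the `services.json` contents instead of reading the file:

```python
import json

# Inline copy of services.json from Task-2, so the sketch runs on its own
SERVICES_JSON = """
{
    "services": {
        "debug": "on",
        "aws": {"name": "EC2", "type": "pay per hour", "instances": 500, "count": 500},
        "azure": {"name": "VM", "type": "pay per hour", "instances": 500, "count": 500},
        "gcp": {"name": "Compute Engine", "type": "pay per hour", "instances": 500, "count": 500}
    }
}
"""


def service_names(data: dict) -> dict[str, str]:
    """Hypothetical helper: map each provider key under "services" to its "name"."""
    return {
        provider: details["name"]
        for provider, details in data["services"].items()
        if isinstance(details, dict) and "name" in details  # skips "debug": "on"
    }


# Reading the real file would use json.load(file) as in the script above
data = json.loads(SERVICES_JSON)
for provider, name in service_names(data).items():
    print(f"{provider}: {name}")
```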

Task-3: Read the YAML file services.yaml (in the folder 2023/day15 of the 90DaysOfDevOps Git repository) using Python and convert its contents from YAML to JSON.

Solution:

As a first step, make sure PyYAML is installed (`pip install pyyaml`). The `json` module is part of Python's standard library, so it does not need to be installed. Also make sure the `services.yaml` file is in the directory you run the script from (copy it there); otherwise `open()` raises a `FileNotFoundError`.

We begin by importing the required modules, `yaml` and `json`. Next, we open the `services.yaml` file in read mode using the `open()` function. The file is opened in a context manager (`with` statement), which ensures that it is closed automatically once we are done reading its contents.

Inside the `with` block, we use `yaml.safe_load(file)` to load the contents of the YAML file. This function parses the YAML data and returns a Python object representing the data structure.

We then convert the loaded data to JSON format using `json.dumps(yaml_data, indent=4)`. The `json.dumps()` function takes the Python object produced by the YAML parser and returns a JSON-formatted string, and the `indent=4` parameter indents each level of the result by 4 spaces.

Finally, we print the JSON data using `print(json_data)`, which displays the converted JSON on the console with proper indentation.

```python
import yaml
import json

# Open the YAML file in read mode
with open('services.yaml', 'r') as file:
    # Load the YAML contents into Python objects
    yaml_data = yaml.safe_load(file)

# Convert the Python data to a JSON string
json_data = json.dumps(yaml_data, indent=4)

# Print the JSON data
print(json_data)
```

Output: the contents of services.yaml, printed as JSON with 4-space indentation.
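If you need this conversion in more than one place, it can be wrapped in a function. The sketch below (assuming PyYAML is installed; `yaml_to_json` is a hypothetical name) works on a YAML string, turns parse errors into a `ValueError`, and passes `default=str` to `json.dumps()`. That last part matters because `yaml.safe_load()` turns unquoted dates such as `2023-06-01` into `datetime.date` objects, which `json.dumps()` cannot serialize on its own.

```python
import json

import yaml  # PyYAML: pip install pyyaml


def yaml_to_json(yaml_text: str, indent: int = 4) -> str:
    """Hypothetical helper: parse YAML text safely and return it as a JSON string."""
    try:
        data = yaml.safe_load(yaml_text)
    except yaml.YAMLError as exc:
        raise ValueError(f"invalid YAML: {exc}") from exc
    # default=str converts values JSON can't represent (e.g. datetime.date) to strings
    return json.dumps(data, indent=indent, default=str)


print(yaml_to_json("provider: aws\nlaunched: 2023-06-01\n"))
# {
#     "provider": "aws",
#     "launched": "2023-06-01"
# }
```

With the task's file, the call would be `yaml_to_json(file.read())` inside the `with` block.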

Congratulations! We have accomplished all the tasks for today and explored important topics like Python libraries and modules. I trust that you have found this article to be informative and valuable. If that's the case, I would greatly appreciate it if you could show your support by giving it a Like. Your feedback and suggestions are always welcome as they help me improve and provide better content. Don't hesitate to share your thoughts in the comments section below. Thank you for joining me on this learning journey!